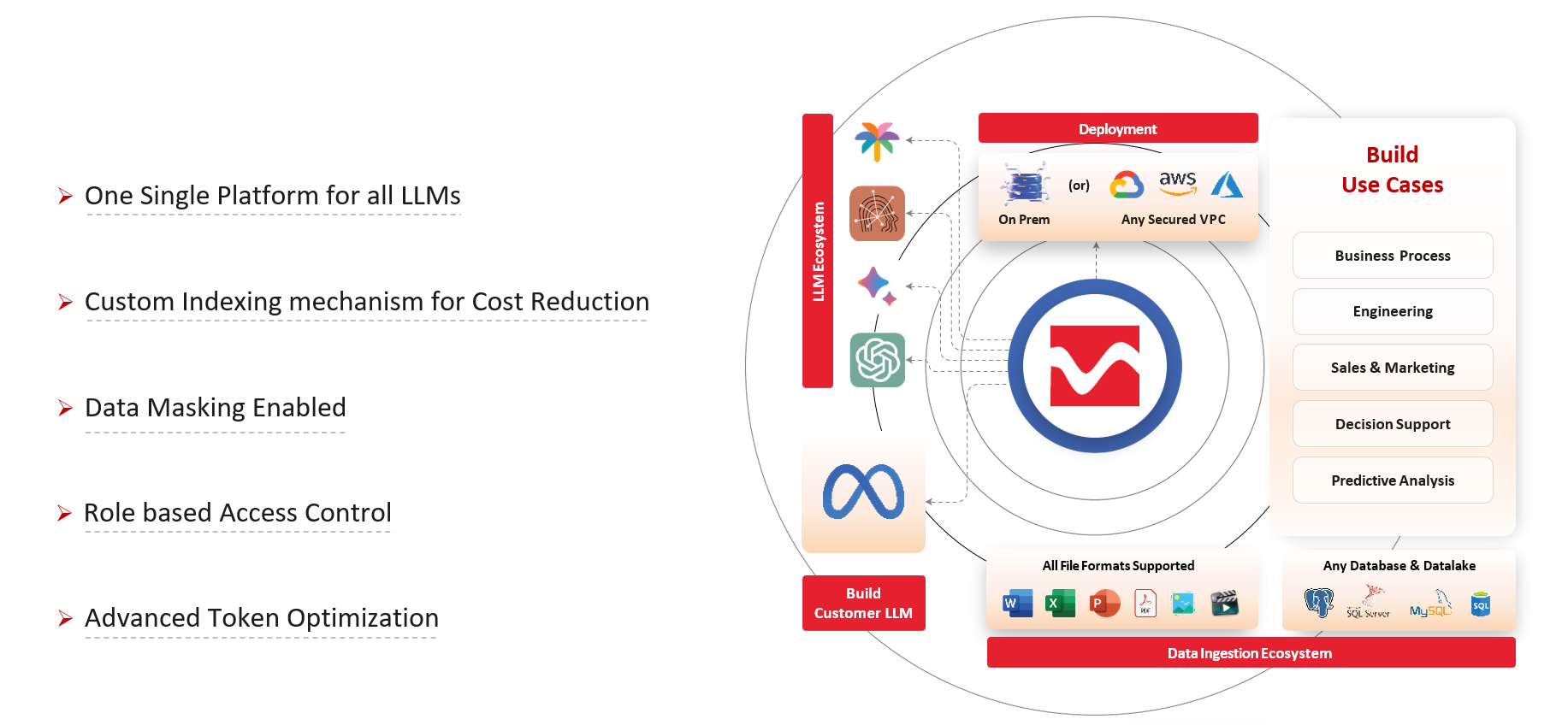

VenusGeo Enterprise AI Framework

Architected for Hyper-Scalability, Model Flexibility, and Enterprise-Grade Security.

The VenusGeo AI Framework is a cloud-agnostic, modular AI architecture purpose-built for large-scale enterprise deployments. It unifies model orchestration, multi-environment execution, data integration, and optimization strategies under a single, extensible platform — empowering organizations to operationalize AI at speed and scale.

Benefits

Orchestration Layer

A distributed, API-first orchestration layer enabling seamless interoperability across LLMs, vector databases, enterprise APIs, and third-party services. Built on event-driven microservices and containerized runtimes for low-latency, high-throughput inference workflows.

Secure & Controlled Access with RBAC

Implement Role-Based Access Control (RBAC) to define and restrict AI access based on user roles, ensuring that sensitive operations and data remain protected from unauthorized use.

Key Architectural Pillars

A distributed, API-first orchestration layer enabling seamless interoperability across LLMs, vector databases, enterprise APIs, and third-party services. Built on event-driven microservices and containerized runtimes for low-latency, high-throughput inference workflows.

Supports dynamic deployment across on-premise data centers, public cloud providers (AWS, Azure, GCP), air-gapped environments, or secure VPN-based access layers. Enables policy-driven workload allocation based on latency, cost, compliance, or data sovereignty.

Engineered for model-agnostic integration with next-gen LLMs including LLAMA 2, Zephyr, Gemini, OpenAI GPT, and other transformer-based architectures. Enables rapid model swapping, benchmarking, and ensemble strategies through standardized adapters.

Fine-tunes foundation models on enterprise-grade, domain-specific corpora. Incorporates reinforcement learning (RLHF), quantization, and parameter-efficient tuning (LoRA/PEFT) to deliver performant, compact, and contextually relevant models tailored to organizational data assets.

Ingests structured and unstructured data from heterogeneous sources (CRMs, ERPs, data lakes, streaming APIs) with schema-aware parsing, role-based access controls (RBAC), and zero-trust data movement principles. Supports batch, real-time, and federated data pipelines.

Implements advanced tokenization strategies, prompt compression, and dynamic context windows to reduce model execution costs while preserving inference accuracy. Embedded token-aware routing and caching reduce cold start latency and cloud utilization.

Leverages proprietary high-dimensional vector embedding techniques integrated with RAG frameworks and vector stores (e.g., FAISS, Pinecone, Weaviate). Enables high-precision semantic search, personalization, and knowledge-grounded generation at scale.

Strategic Differentiation for the Enterprise

Our Key Differentiators:

Seamless integration across On-premise, Cloud, or VPN architectures, providing full control over your infrastructure landscape.

Engineered to optimize enterprise-grade policy retrieval and compliance workflows, ensuring high availability and consistency.

Leveraging state-of-the-art memory management and caching algorithms to enhance system performance and drive cost-efficiency.

Open, modular framework that ensures seamless model interchangeability and scalable deployment without vendor constraints.

Robust, multi-layered encryption and real-time data masking technologies, safeguarding sensitive information throughout its lifecycle.